Chrome engineers face an unusual problem. They know their 32-bit browser processes hit a 2GB JavaScript memory limit, but they can't fully map where that constraint lives in the codebase. The limit is "implicit," woven so deeply into architectural assumptions that identifying every dependency would mean excavating layers of inherited infrastructure.

A simple technical boundary echoes from 1985, when engineers made a bet about how much headroom would be enough.

The Intel 80386 extended the x86 line from 16-bit to 32-bit addressing, creating a 4GB memory ceiling. Typical systems at the time had 4MB of RAM. Engineers designed for what they could see: 32 bits would provide ample headroom for any foreseeable workload. The gap between possible and practical seemed enormous, and the decision made sense given contemporary constraints.
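The ceiling is plain arithmetic: an n-bit address names 2^n distinct bytes. The minimal sketch here computes that for a few widths and compares the 32-bit ceiling with the article's 4MB figure for a typical system. The helper names are illustrative only. A flat 16-bit address reaches just 64 KiB; earlier x86 chips got past that only through segmentation.

```python
def address_space_bytes(bits: int) -> int:
    """Distinct byte addresses an n-bit address can name: 2**bits."""
    return 2 ** bits


def human(n: int) -> str:
    """Render a byte count in binary (1024-based) units, flooring to whole units."""
    for unit in ("B", "KiB", "MiB", "GiB", "TiB", "PiB", "EiB"):
        if n < 1024:
            return f"{n} {unit}"
        n //= 1024
    return f"{n} ZiB"


# 4MB is the article's figure for a typical system when the 80386 shipped.
TYPICAL_1985_RAM = 4 * 2**20
HEADROOM = address_space_bytes(32) // TYPICAL_1985_RAM  # ratio of ceiling to RAM

for bits in (16, 32, 64):
    print(f"{bits}-bit: {human(address_space_bytes(bits))}")
print(f"32-bit ceiling vs. 4MB of RAM: {HEADROOM}x headroom")
```

A 1024x margin is the "impossibly wide" gap the paragraph describes.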

When Sufficient Stops Being Sufficient

DRAM capacity quadrupled every three years through the 1990s. By the decade's end, midrange systems could afford gigabytes of memory. The 4GB ceiling started binding. Database servers hit addressing limits. Enterprise applications couldn't access the memory physically installed in their machines. The headroom had run out.
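How quickly does a 1024x margin disappear at that growth rate? Here is a rough projection, assuming an idealized trend line (exactly 4x every 3 years, starting from the article's 4MB figure). Real memory prices and configurations were never this smooth. Under those assumptions, 1024 = 4^5, so the gap closes in five three-year periods.

```python
import math


def years_to_reach(start_bytes: int, target_bytes: int,
                   factor: float = 4.0, period_years: float = 3.0) -> float:
    """Years for capacity to grow from start to target under an idealized trend:
    multiply by `factor` every `period_years` (default: 4x every 3 years)."""
    if target_bytes <= start_bytes:
        return 0.0
    periods = math.log2(target_bytes / start_bytes) / math.log2(factor)
    return periods * period_years


MIB, GIB = 2**20, 2**30
years = years_to_reach(4 * MIB, 4 * GIB)  # 1024x gap = 4**5, so 5 periods
print(f"~{years:.0f} years: 1985 + {years:.0f} = {1985 + round(years)}")
```

The projection lands at 2000, consistent with the ceiling starting to bind around the end of the decade.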

Intel introduced Physical Address Extension (PAE) in 1995, allowing 32-bit processors to reach up to 64GB of physical memory by widening page-table entries to hold 36-bit physical addresses. Individual processes remained trapped in 4GB virtual address spaces. Operating systems gained visibility into more memory while applications couldn't fully use it. The fix created new layers of complexity.
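PAE changed what page tables point to, not the size of the addresses a process uses. Page-table entries grew to 64 bits so they could hold frame numbers for physical addresses up to 36 bits wide on the original implementation. The page walk also gained a small top level, the page-directory-pointer table. This sketch decodes a 32-bit linear address the way PAE paging splits it for 4 KiB pages. The function name and example address are illustrative. Every index still comes from a 32-bit value, which is why each process stays within 4GB.

```python
# Split a 32-bit linear (virtual) address the way PAE paging walks it (4 KiB pages).
def pae_split(linear: int) -> dict:
    if not 0 <= linear < 2**32:
        raise ValueError("PAE still translates 32-bit linear addresses")
    return {
        "pdpt_index": (linear >> 30) & 0x3,   # bits 31-30: 4 page-directory-pointer entries
        "pd_index": (linear >> 21) & 0x1FF,   # bits 29-21: 512 page-directory entries
        "pt_index": (linear >> 12) & 0x1FF,   # bits 20-12: 512 page-table entries
        "offset": linear & 0xFFF,             # bits 11-0: byte within a 4 KiB page
    }


PHYS_BITS = 36  # physical address width on the original PAE implementation
VIRT_BITS = 32  # linear address width per address space, unchanged by PAE

print(2**PHYS_BITS // 2**30, "GiB of physical memory reachable")
print(2**VIRT_BITS // 2**30, "GiB per process address space")
print(pae_split(0xC0101ABC))
```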

The transition to 64-bit computing required WOW64 (Windows 32-bit on Windows 64-bit), a compatibility layer that translates 32-bit system calls into 64-bit equivalents. This translation persists in modern systems and adds overhead to every 32-bit process that runs on 64-bit Windows. Code rebuilt as native 64-bit paid a different price: pointers doubled from 4 to 8 bytes, so pointer-heavy data structures could nearly double their memory consumption. The architectural expansion brought invisible costs that operators encounter as unexplained resource consumption.
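The pointer cost depends on layout, not just field count. This sketch uses a simplified natural-alignment model, in which each field is aligned to its own size and the total is padded to the largest alignment. It compares a hypothetical pointer-heavy tree node with a hypothetical data-heavy record. Real compilers and ABIs can differ for other field types, but pointers and 4-byte integers follow this model on common platforms.

```python
from typing import Sequence


def struct_size(field_sizes: Sequence[int]) -> int:
    """Size of a C struct under a simplified natural-alignment model."""
    offset, max_align = 0, 1
    for size in field_sizes:
        align = size                          # natural alignment assumption
        offset = -(-offset // align) * align  # round offset up to the alignment
        offset += size
        max_align = max(max_align, align)
    return -(-offset // max_align) * max_align  # trailing padding


def tree_node(ptr: int) -> int:
    # Hypothetical pointer-heavy node: left, right, parent pointers + 4-byte key.
    return struct_size([ptr, ptr, ptr, 4])


def sample(ptr: int) -> int:
    # Hypothetical data-heavy record: one pointer + three 4-byte integers.
    return struct_size([ptr, 4, 4, 4])


for name, fn in (("tree_node", tree_node), ("sample", sample)):
    print(f"{name}: {fn(4)} bytes (32-bit) -> {fn(8)} bytes (64-bit)")
```

The tree node grows from 16 to 32 bytes because trailing padding rounds 28 up to 32. The record grows only from 16 to 24.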

Where 1985 Meets Production

The 1985 headroom assumption surfaces in production systems that seem unrelated to processor architecture. Cloud platforms cap service memory at fixed boundaries, and Google Cloud Run's 32GB maximum reflects architectural patterns inherited across generations from addressing decisions made before the web existed. Container orchestration requires explicit memory limits because the underlying architecture doesn't constrain a process's consumption on its own. Every configuration choice is a negotiation with inherited constraints.
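On Linux, explicit container memory limits are enforced through cgroups. A process that wants to respect its limit has to read the limit itself. This minimal sketch assumes the default mount points: `/sys/fs/cgroup/memory.max` for cgroup v2 and `/sys/fs/cgroup/memory/memory.limit_in_bytes` for v1. The threshold used to detect v1's "unlimited" sentinel is a heuristic, and the function names are illustrative.

```python
from pathlib import Path
from typing import Optional

# Default mount points (an assumption; some hosts mount cgroups elsewhere).
CGROUP_V2_MEMORY_MAX = Path("/sys/fs/cgroup/memory.max")
CGROUP_V1_MEMORY_LIMIT = Path("/sys/fs/cgroup/memory/memory.limit_in_bytes")

# cgroup v1 reports "no limit" as a huge page-aligned number just below 2**63;
# treating anything this large as unlimited is a heuristic.
UNLIMITED_THRESHOLD = 2**62


def parse_memory_limit(raw: str) -> Optional[int]:
    """Return the limit in bytes, or None if the cgroup is unlimited."""
    value = raw.strip()
    if value == "max":  # cgroup v2 spelling of "no limit"
        return None
    limit = int(value)
    return None if limit >= UNLIMITED_THRESHOLD else limit


def container_memory_limit() -> Optional[int]:
    """Try cgroup v2 first, then v1; None means no limit was found."""
    for path in (CGROUP_V2_MEMORY_MAX, CGROUP_V1_MEMORY_LIMIT):
        try:
            return parse_memory_limit(path.read_text())
        except (OSError, ValueError):
            continue
    return None


print(container_memory_limit())
```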

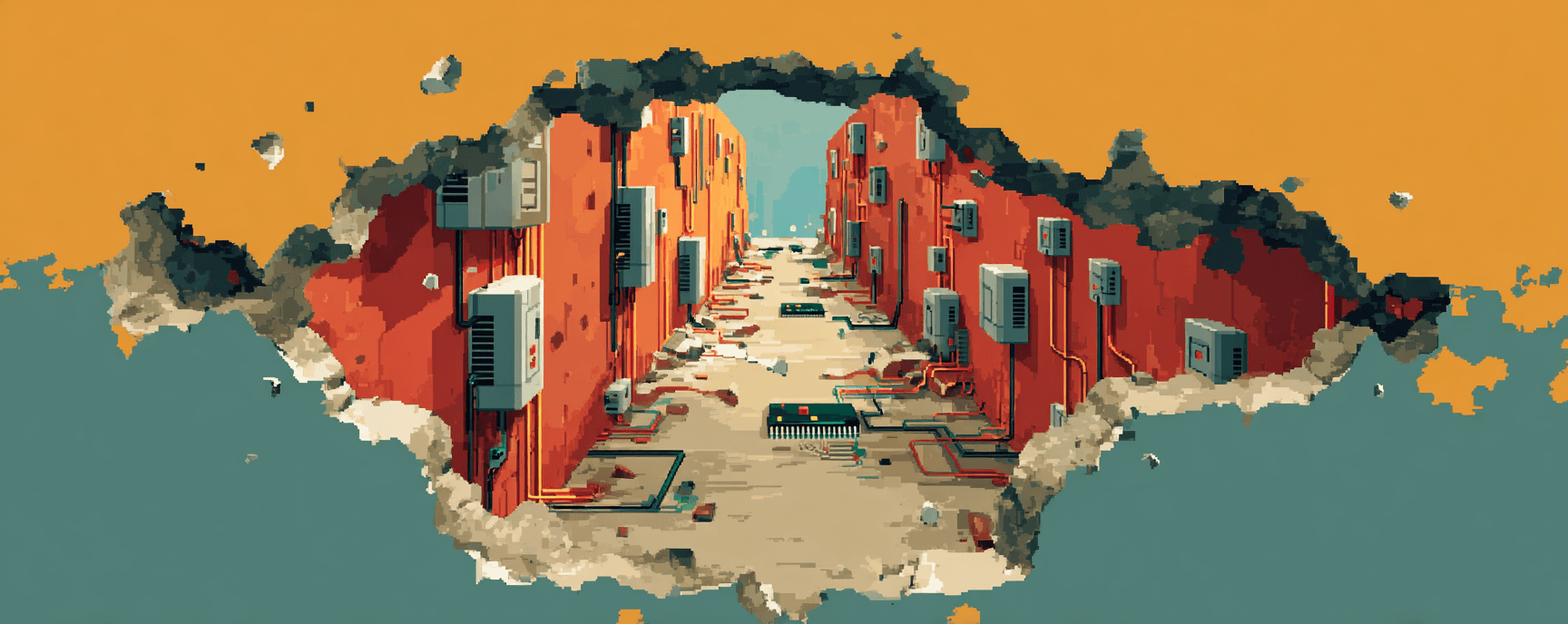

Chrome's inability to fully map where its 2GB limit lives shows how deeply these assumptions embed themselves. Memory-intensive pages hit architectural ceilings that have nothing to do with available system RAM and everything to do with decisions from 1985. The limit is woven through layers of inherited infrastructure: PartitionAlloc, internal allocators, and implicit assumptions about address space that accumulated over decades.

When we manage browser fleets at scale, with thousands of concurrent sessions across heterogeneous environments, we navigate these inherited constraints daily. The 2GB per-process limit affects how many sessions we can run per instance. Compatibility-translation overhead shapes resource allocation decisions. Pointer size influences memory planning for intensive workloads. These are the operational realities that determine what's possible when automation runs at production scale.
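Here is a back-of-the-envelope version of that planning, as a sketch under stated assumptions. Every input is a hypothetical placeholder, not a measured figure. The point it illustrates is that a per-process ceiling behaves differently from total RAM. If one process needs more than the ceiling, adding instance memory does not help, and the sketch refuses that profile instead of dividing.

```python
from dataclasses import dataclass


@dataclass
class SessionProfile:
    # Hypothetical planning inputs; substitute measured values for a real fleet.
    processes: int        # renderer processes a session keeps alive
    mib_per_process: int  # peak footprint of each of those processes
    overhead_mib: int     # browser/GPU/utility processes for the session


def sessions_per_instance(instance_mib: int, reserved_mib: int,
                          profile: SessionProfile,
                          per_process_cap_mib: int = 2048) -> int:
    """How many sessions fit on one instance, refusing profiles in which a
    single process would exceed the per-process ceiling (2GB by default)."""
    if profile.mib_per_process > per_process_cap_mib:
        raise ValueError("one process needs more than its address-space ceiling; "
                         "more instance RAM will not help")
    per_session = profile.processes * profile.mib_per_process + profile.overhead_mib
    return max(0, (instance_mib - reserved_mib) // per_session)


profile = SessionProfile(processes=2, mib_per_process=300, overhead_mib=200)
print(sessions_per_instance(16 * 1024, 1024, profile))
```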

Layers Upon Layers

The attempted fixes created new infrastructure:

- PAE (1995): Operating systems could see more memory, but processes remained trapped in 4GB spaces

- WOW64 (2000s): Translation overhead persists in every 32-bit process on 64-bit systems

- 64-bit transition: Pointer sizes doubled, invisibly increasing memory consumption for pointer-heavy structures

Modern operators navigate multiple generations of compatibility infrastructure simultaneously, each carrying forward assumptions from different eras. The complexity accumulates across decades.

Across infrastructure, decisions made for contemporary constraints create cascading complexity when those constraints disappear. Engineers assume sufficient headroom, build dependencies on that assumption, then discover the dependencies have become invisible architecture. The 1985 bet on 32-bit addressing made sense, but the compatibility layers, implicit limits, and translation overhead built around it compound across decades. The headroom assumption becomes the constraint we inherit.

Things to follow up on...

- Firefox's process isolation: Mozilla's multi-process architecture reveals that process overhead on Windows x64 runs approximately 7-10MB per process with minimal initialization, showing how memory architecture decisions cascade into browser design choices.

- SQL Server memory paging: Database systems have historically struggled with default memory configurations that assume unlimited RAM. SQL Server's default maximum server memory is 2,147,483,647 MB (roughly 2 million GB, and the largest signed 32-bit integer), which creates operational problems when Windows forces that memory to be paged to disk.

- Container CPU limit regressions: JDK 17.0.5 introduced a regression where the JVM ignored cgroup CPU limits and read the physical CPU count instead, demonstrating how architectural assumptions about resource visibility persist even in modern containerized environments.

- Heterogeneous memory management complexity: Systems with different instruction set architectures face binary incompatibility as well as differences in endianness and calling conventions, multiplying the inherited complexity when running mixed 32-bit and 64-bit workloads.
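The JDK regression in this list comes down to one question: does the runtime size itself from the host's CPU count, or from the container's quota? This sketch shows the container-aware calculation. It takes the quota divided by the period, rounds up, and caps the result at the host count. It follows that general approach rather than reproducing any JVM's code. It assumes a cgroup v2 `cpu.max` file at the default path `/sys/fs/cgroup/cpu.max`, and the function names are illustrative.

```python
import math
import os


def cpus_from_cpu_max(raw: str, host_cpus: int) -> int:
    """Effective CPU count from a cgroup v2 cpu.max value.

    cpu.max reads "<quota> <period>" in microseconds, or "max <period>" when
    no quota is set. The quota is converted to whole CPUs (rounded up) and
    capped at the host's count.
    """
    quota, period = raw.split()
    if quota == "max":
        return host_cpus
    return max(1, min(host_cpus, math.ceil(int(quota) / int(period))))


def effective_cpus(path: str = "/sys/fs/cgroup/cpu.max") -> int:
    """Container-aware CPU count; falls back to the host count when no
    readable cgroup v2 quota file exists."""
    host = os.cpu_count() or 1
    try:
        with open(path) as f:
            return cpus_from_cpu_max(f.read(), host)
    except (OSError, ValueError):
        return host


print(effective_cpus())
```

A quota of 150000 µs per 100000 µs period rounds up to 2 CPUs. A runtime that skips this step and reads the host count would size its thread pools for the whole machine.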