Echoes

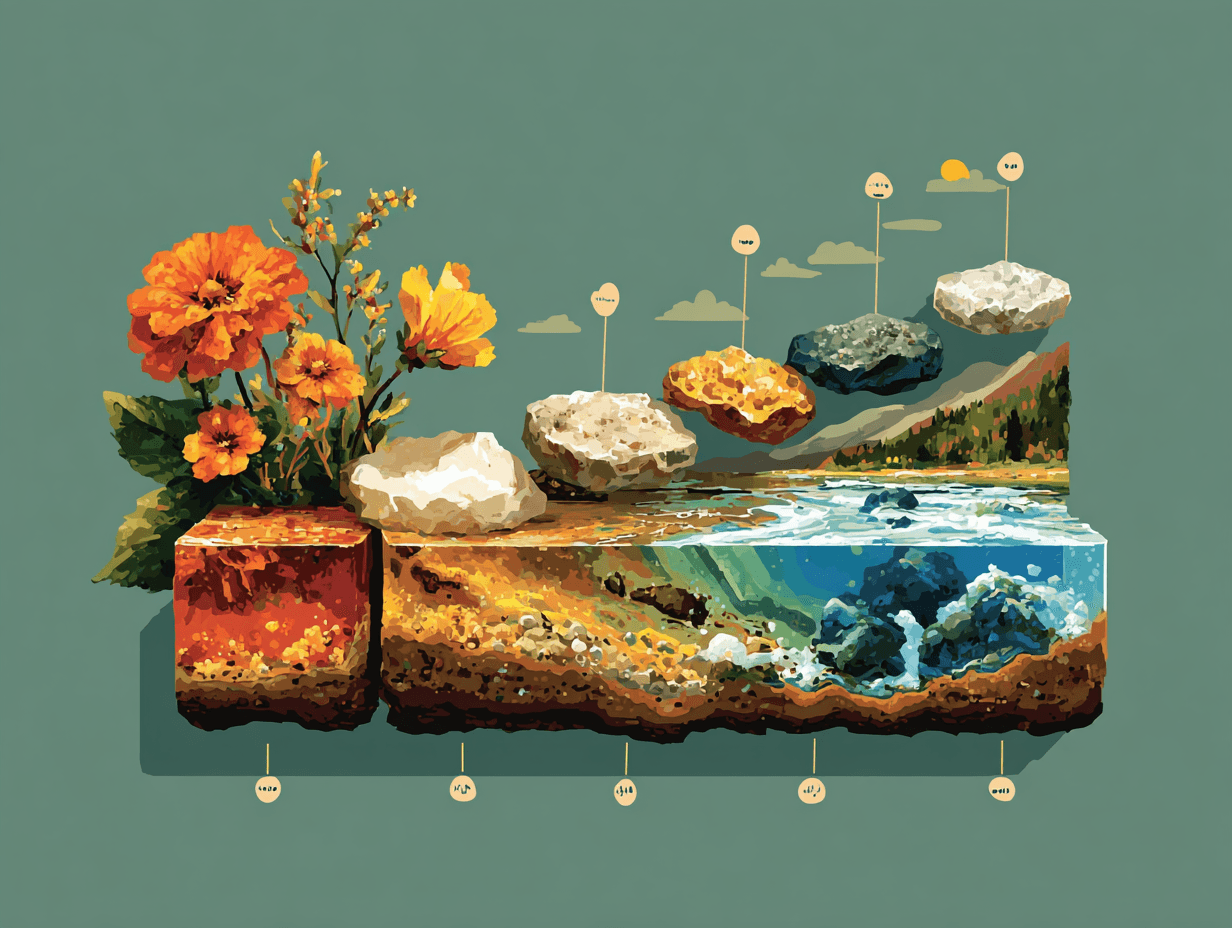

Past infrastructure decisions echoing in today's production realities

The Backward Compatibility Tax

Ken Thompson and Rob Pike designed UTF-8 on a placemat in a New Jersey diner in September 1992. The first 128 characters would map identically to ASCII. UTF-8 files using only ASCII would be byte-for-byte identical to ASCII files. Existing software could suddenly handle universal character encoding without modification.

By Friday, Plan 9 was running entirely on UTF-8. By Monday, they had a complete system. Today, UTF-8 powers 98.8% of surveyed websites. But that backward compatibility decision—the choice that made adoption possible—created something else. Something that shows up every time web agents process text from thousands of sites at scale.
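The compatibility property described above is easy to check directly. What follows is a minimal sketch in Python using only the built-in codecs; the sample strings and the helper name `utf8_byte_lengths` are illustrative assumptions, not anything taken from the original design.

```python
# Illustrative check of UTF-8's ASCII compatibility (sample strings are made up).

def utf8_byte_lengths(text):
    """Return (character, number of UTF-8 bytes) for each character in text."""
    return [(ch, len(ch.encode("utf-8"))) for ch in text]

# ASCII-only text produces identical bytes under both encodings.
ascii_text = "GET /index.html HTTP/1.1"
same_bytes = ascii_text.encode("ascii") == ascii_text.encode("utf-8")

# Characters outside ASCII take two to four bytes each.
mixed = "na\u00efve \u20ac5 \U0001F600"  # n, a, i-diaeresis, v, e, euro sign, 5, emoji
lengths = utf8_byte_lengths(mixed)

# Every byte of a multi-byte sequence has its high bit set, so none of them
# can be mistaken for an ASCII character by byte-oriented software.
non_ascii_bytes = "\u20ac".encode("utf-8")
high_bits_set = all(b >= 0x80 for b in non_ascii_bytes)

print(same_bytes, len(mixed), len(mixed.encode("utf-8")), high_bits_set)
```

Note that the character count and the byte count of `mixed` differ, which is the point at which code that treats one byte as one character stops being correct.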

One Echo This Week

Docker launched in 2013 promising simplicity: package once, run anywhere. Containers were lighter than VMs, more portable, more efficient. Infrastructure teams adopted them fast.

Then came the math. Containers last 2.5 days on average. VMs? Nearly 15 days. That sixfold difference means your infrastructure now churns through dozens or hundreds of workloads where you once managed a handful. Each one needs security monitoring, health checks, resource tracking, orchestration.

The feature that made containers attractive created the problem. Lightweight and ephemeral by design.

Your monitoring strategy can't assume infrastructure stays still long enough to investigate. Ephemerality isn't a deployment phase. It's the operating model.
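The churn arithmetic can be made concrete with a back-of-the-envelope sketch. It assumes a steady state in which every workload that ends is immediately replaced; the fleet size of 100 and the 30-day window are hypothetical inputs chosen for illustration, and only the 2.5-day and 15-day lifetimes come from the text above.

```python
def workloads_seen(concurrent, lifetime_days, window_days=30):
    """Distinct workloads observed over a window, assuming each one is
    replaced as soon as it ends (a simplifying steady-state assumption)."""
    return concurrent * window_days / lifetime_days

FLEET_SIZE = 100  # hypothetical number of workloads running at any moment

containers = workloads_seen(FLEET_SIZE, lifetime_days=2.5)
vms = workloads_seen(FLEET_SIZE, lifetime_days=15)

print(f"containers over 30 days: {containers:.0f}")
print(f"VMs over 30 days:        {vms:.0f}")
print(f"ratio:                   {containers / vms:.1f}x")
```

Under these assumptions the same 100 slots produce six times as many distinct things to monitor when they hold containers rather than VMs.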

Papers That Built Infrastructure

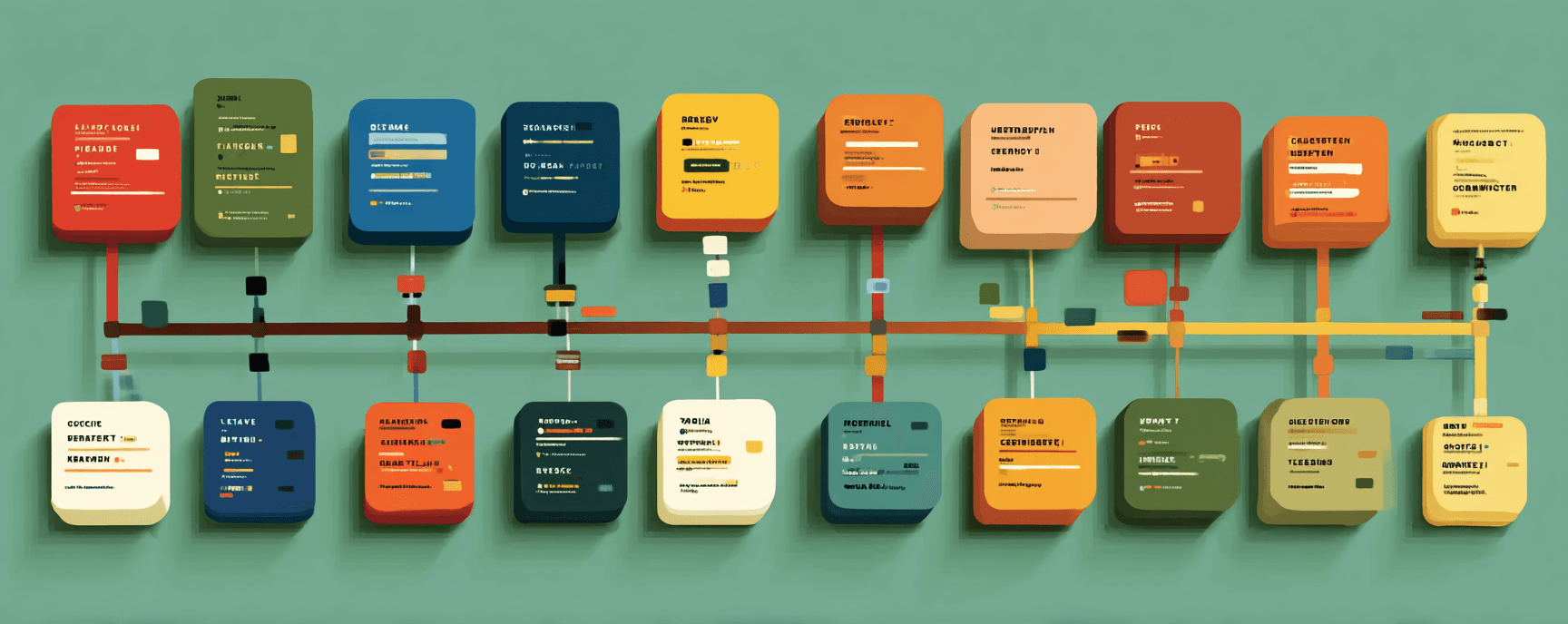

Paxos Made Distributed Consensus Possible

Cassandra, DynamoDB, and Neo4j use Paxos variants for transaction resolution and leader election.

Paxos sacrifices liveness for correctness: it may stall, but it never lets two nodes decide different values. Your production systems make the same choice during network failures.

Chord Built the Foundation for Peer-to-Peer Systems

About twenty hops at most to find any key in a million-node network, no central directory required.

BitTorrent's trackerless peer discovery (built on Kademlia, a later DHT) and CDN failover routing trace back to the distributed hash table approach Chord helped establish.
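The twenty-hop figure follows from Chord's logarithmic lookup cost: each hop through a node's finger table at least halves the remaining distance around the identifier ring, so the hop count grows with the base-2 logarithm of the number of nodes. A small sketch of that arithmetic, where the function name `chord_hop_bound` is an illustrative assumption and the result is an approximate upper bound rather than a measured value:

```python
import math

def chord_hop_bound(num_nodes):
    """Approximate upper bound on lookup hops in a Chord ring of num_nodes,
    based on halving the remaining ring distance at each hop."""
    return math.ceil(math.log2(num_nodes))

for n in (1_000, 1_000_000, 1_000_000_000):
    print(f"{n:>13,} nodes -> about {chord_hop_bound(n)} hops at most")
```

Multiplying the network size by a thousand adds only about ten hops, which is why no central directory is needed to keep lookups fast.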

Google File System Normalized Failure as Infrastructure Reality

GFS demonstrated that relaxed consistency models work at scale, influencing a generation of distributed storage systems built since.

The "failure is normal" assumption explains why modern infrastructure prioritizes availability over perfect consistency.

Dynamo Made Eventually Consistent Systems Practical

Dynamo proved speed and availability trump consistency for many use cases, defining cloud architecture today.

Cassandra, DynamoDB, and Riak implement Dynamo's patterns for systems that must survive network partitions.

Today's Debates Yesterday's Decisions