Recent Activity

February — Issue #16

Agent platforms deploy engineers alongside customers because production knowledge hasn't consolidated into self-service infrastructure—revealing what makes web automation's edge cases hard to encode.

An analyst discovers that her team's automated competitor tracking normalized away the messy signals that predicted price changes. Manual collectors had preserved ambiguous availability markers because they didn't know what to ignore; the automated pipeline converts them to clean binary data, cutting the pricing model's predictive accuracy by five percentage points.

When daily verification rituals quietly dissolve into trust, workers discover their expertise has relocated from managing tools to evaluating output—a threshold crossed through accumulated irrelevance rather than conscious decision.

February — Issue #14

Organizations are flattening management layers faster than they're rebuilding the judgment-development infrastructure those layers quietly provided—revealing what middle managers actually built beyond reports.

Operating web automation at scale requires perpetual spot-checking protocols that build trust through calibrated thresholds—confidence infrastructure that never fully disappears.

January — Issue #13

Harness's new AI Scribe reveals how incident response was stuck in a manual coordination bottleneck—treating team dialogue as structured operational input so engineers stop doing two jobs at once.

The flexibility premium buys organizational capacity to handle web complexity you haven't encountered yet—insurance against an adversarial environment that constantly evolves.

Infrastructure decisions become permanent through organizational learning, not technical constraints—teams accumulate expertise that doesn't transfer when you try to migrate.

January — Issue #12

At million-page scale, node-html-parser's speed advantage transforms from benchmark curiosity into operational necessity when throughput constraints become the dominant reality.

Cheerio dominates despite slower speeds because web automation bottlenecks live in authentication, error recovery, and human maintenance—not parsing milliseconds.

Accessibility features designed for screen readers became critical automation infrastructure—revealing how solving the hardest human problems creates the most reliable technical foundations.

January — Issue #11

A privacy researcher turned bot hunter reveals how web automation's adversarial reality creates an endless arms race where human judgment remains automation's hardest problem.

When SOAP-to-REST migration cut integration time by 40%, it didn't just swap protocols—it eliminated an entire organizational layer and the specialists who managed it.

Teams that successfully deploy web agents don't just adopt technology—they develop distinct learning patterns matched to operational reality, from mapping reliability boundaries through production observability to codifying what survives the web's adversarial resistance.

January — Issue #10

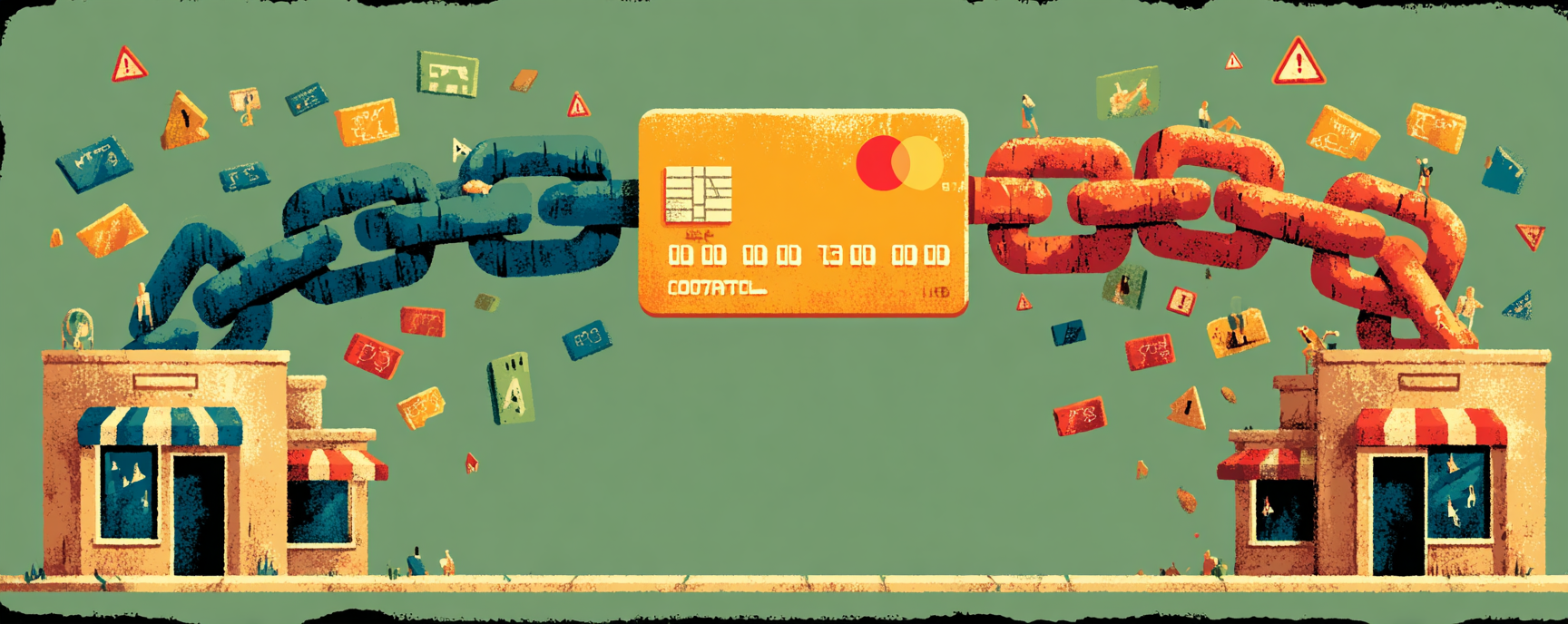

Payment networks just made agent commerce infrastructure-grade—cryptographic verification now proves authorization across thousands of merchant sites without triggering fraud systems.

Kate Blair built coordination infrastructure for agents that can't talk to each other—then merged it with a competitor to create ecosystem-level architecture that prevents fragmentation.

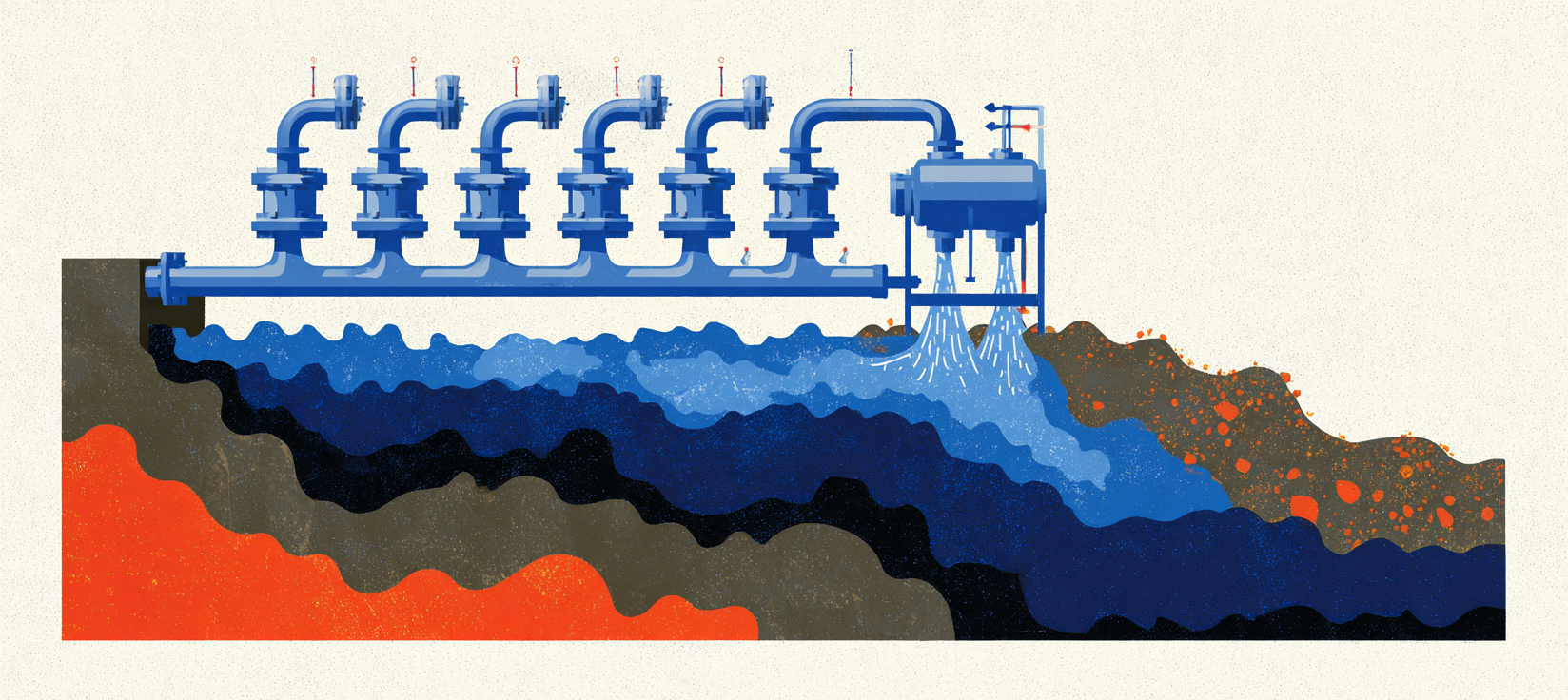

Production automation teams implement Selenium's programmatic cookie management when reliability depends on sessions surviving restarts without human intervention at scale.

December — Issue #9

Compliance teams discover their evaluation frameworks fail when agents make probabilistic decisions—revealing the invisible expertise gap that determines whether enterprise AI actually deploys.

Google's permission-based agent architecture reveals why organizational capacity to evaluate delegation matters more than technical capability, and what changes when approval infrastructure becomes trustworthy enough to disappear.

When agents handle work beyond your observation, trust must shift from watching actions to evaluating outcomes—a delegation gap most organizations haven't built capacity to bridge.

December — Issue #8

JavaScript frameworks made browsing delightful in 2010, but that same architectural shift created the timing puzzles and complexity that web automation infrastructure still navigates today.

Proven agents shift from seeking permission to requesting strategic guidance, transforming humans from gatekeepers into force multipliers.

Checkpoint-based tools build organizational trust through approval workflows that teach teams what agents can reliably handle.

December — Issue #7

Organizations are restructuring around agent capabilities before the infrastructure exists to support them, revealing how transformation happens through commitment, not readiness.

Operating web agents at scale demands invisible expertise—pattern recognition, tribal knowledge, and cognitive load that no dashboard captures but determines whether reliability holds.

Workflows aren't monolithic—they're bundles of decision types that each demand different infrastructure, verification, and human involvement to actually work at scale.

December — Issue #6

Browser automation scripts break when sites redesign; web agents reason through changes but cost more—choosing wrong means maintenance hell or blown budgets at scale.

The 1996 browser wars split HTML between semantic structure and visual presentation—a compromise that became permanent, creating the ambiguity web automation navigates today.

December — Issue #5

Extraction pipelines run smoothly while data quietly becomes wrong—here's how to catch quality drift before it corrupts decisions at scale.

Organizations delegate to agents they don't trust—the threshold moment where verification questions become orchestration questions, revealing how expertise transforms into infrastructure design despite persistent discomfort.

November — Issue #4

Production reveals what staging cannot teach—how the adversarial, constantly changing web actually behaves under real operational conditions.

Staging validates your code logic perfectly while missing the real test: whether your assumptions about the web match reality.

APIs promise programmatic access but often exclude the data enterprises actually need, forcing hybrid approaches that combine official channels with browser-based collection at scale.

October — Issue #1

Engineering's shift from coding to orchestrating AI agents awaits unglamorous infrastructure work—observability, reliability guarantees, and institutional knowledge frameworks—that mostly doesn't exist yet at enterprise scale.

Tracking competitor pricing means checking dozens of personalized variants simultaneously, turning simple monitoring into complex infrastructure requiring continuous maintenance.

Platforms optimize for individual conversion, creating personalized experiences that make systematic competitive monitoring operationally impossible by design.